Taking Control of Your Code

Learn how to deploy a private, secure GitLab instance using NixOS, Podman, and Nginx. Complete with HTTPS support and a deep dive into home networking.

The Backstory

Before we get into the backstory, if you only want to follow along with the tutorial, you can skip the rest of the Backstory section without issues.

Becoming extremely familiar with cloud technologies is one of the most important things that a Software Engineer can do. Not only does the cloud enable high-velocity startups to scale rapidly as their unicorn takes off, but it also enables large-scale companies to save (or spend) an immense amount of money on their software architecture. In the right hands, cloud architecture can sustain a billion-dollar company with ease. However, in the wrong hands it can destroy you financially. This led to two major obstacles I had for learning the cloud. The first was that I was terrified of hosting an auto-scaling service in the off chance that someone decided to ruin my day (and my credit score for the next seven years) by increasing the usage for my resources beyond the free tier. Second, there are about a million different cloud services. With these two factors combined, there was no chance that I was going beyond a “Hello World” on AWS.

Then, during an internship, I was given the task of becoming familiar with Kubernetes and deploying a relatively simple application to it. Being the type of engineer who likes to fiddle around with things myself, I made the brilliant decision to purchase an old, refurbished Dell desktop from an office near me and install Ubuntu on it. My thought process was that I would easily spin up a quick k8s cluster and get to work deploying anything my heart desired on it. Of course, anyone who has ever tried that can likely guess how that went. I struggled immensely with the entire process, but in the end it did teach me a lot. Then, I set up the home server as a makeshift NVIDIA Shield and forgot about it for a year.

Fast forward to a couple of weeks ago, when I finally decided to do something about the recent ethical issues surrounding AI training on GitHub. My idea? Spin up a quick and easy GitLab on my server and call it a day. Naturally, I forgot to factor in my innate need to scope creep on any project I touch. I went from wanting a GitLab instance I could reach from my desktop by IP address, to wanting a Pi-hole to give GitLab a DNS record, to finally arriving at a reverse proxy (Nginx) handling traffic between GitLab, Adguard Home, and more (so, so much more). While I won’t be sharing the entirety of my setup in this article, I will share the minimal setup I used to run GitLab with a signed certificate over HTTPS.

Prerequisites - Your Containers, Your Choice

First, before we begin, there are a couple of things that you need to have at hand to follow along with this tutorial. Let’s go through them one by one.

Hardware

First, you are going to need something to host your GitLab instance on. Since this article is about doing things privately, I’m going to be using a refurbished Dell office desktop from Newegg. Personally, I got lucky and bought one with 16GB of RAM and a decent CPU for just under $100.

Software

Second, I am a huge fan of the Nix ecosystem. While I won’t dive deep into it, if nothing else it is a fantastic way to manage your home configuration on any non-Windows device.

Because of this, I’m going to be using NixOS as my operating system. While it is not necessary to use NixOS, as these commands should generally work on any generic Linux installation, I highly recommend you get out of your comfort zone and try something new if you have a clean machine.

Be aware that I am going to tailor this tutorial to NixOS.

Experience

Lastly, I love using NeoVim, Tmux, and Zsh. Because of this, I’m going to assume that you are relatively familiar with terminal-based configurations. You do not need a deep knowledge of any specific tools, but you should be able to follow along with basic commands and be able to edit files.

Certificates

To enable HTTPS, you will need to either get a certificate signed by a trusted authority or use a self-signed certificate. Personally, I ended up setting up a signed certificate using Let’s Encrypt (the process will be shown below) but be warned that this requires you to own a public domain. Alternatively, the self-signed option is certainly a viable (and free) one.

The Tutorial

Assuming that you have all of the prerequisites, let’s get started.

Container Runtimes

The first thing we need is a container runtime. Broadly speaking, there are two large players in the container space: Docker and Podman. Docker is the more popular of the two and is the gold standard for containerization, but Podman more easily supports some workflows (like running containers rootless). In theory, the two should be almost entirely interchangeable, but I will be using Podman.

To start using Podman for our container runtime, we only need to add a few lines to our /etc/nixos/configuration.nix file:

```nix
virtualisation = {
  # Enable containers
  containers.enable = true;

  podman = {
    # Enable podman
    enable = true;

    # Create a `docker` alias for podman, to use it as a drop-in replacement
    dockerCompat = true;

    # Required for containers under podman-compose to be able to talk to each other.
    defaultNetwork.settings.dns_enabled = true;
  };
};

# ...

environment.systemPackages = [
  # ... rest of packages
  pkgs.git
  pkgs.neovim
  pkgs.podman-tui
  pkgs.podman-compose
  pkgs.compose2nix
];

# ...

# Make sure to add yourself to the groups below. You may not need all of them, but it shouldn't hurt to add them all.
users.users.myusername = {
  isNormalUser = true;
  description = "My Username";
  extraGroups = [ "networkmanager" "libvirtd" "docker" "podman" "nginx" ]; # your existing groups may differ; add these groups rather than removing any you already have
  packages = with pkgs; [
    firefox
  ];
  shell = pkgs.zsh; # NixOS also requires programs.zsh.enable = true; elsewhere in your configuration for this
};

# ...
```

While it is not necessary to install podman-tui, podman-compose, and compose2nix, I found them quite useful for debugging and checking my work. Make sure to check them out and see what they do if you’re curious! After modifying the configuration on NixOS, don’t forget to run `sudo nixos-rebuild switch` to sync your system to the new settings.

If you are using NixOS and you create containers through the configuration file, you will need to use `sudo` to run the podman commands, such as `sudo podman ps`, because those containers run under root’s Podman instance rather than your user’s.

If you are not using NixOS, you will likely need to follow the official documentation for each of these tools to get them installed, though you can leave out compose2nix, as it is purely for Nix. In the past, I have also had great luck installing Podman Desktop and letting it set up everything related to Podman, including podman, podman-compose, and Docker compatibility.

At this point, you should be able to run `podman ps` without seeing any errors.

If you chose to use Docker, you can simply replace `podman` with `docker` in all of the commands.
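On NixOS, the containers we declare below go through `virtualisation.oci-containers`, and its `backend` option decides which runtime runs them. The snippet below is a minimal sketch of switching that to Docker. It assumes it replaces the earlier `virtualisation = { ... };` block rather than sitting next to it. NixOS treats Podman’s `dockerCompat` alias and a real Docker install as conflicting, so the alias has to go:

```nix
virtualisation = {
  containers.enable = true;

  # Sketch: run the declarative containers with Docker instead of Podman.
  docker.enable = true;
  oci-containers.backend = "docker";

  # The podman block (or at least its `dockerCompat = true;` line) is removed,
  # since NixOS rejects the podman docker alias alongside real Docker.
};
```

If you stay with Podman, you can still set `oci-containers.backend = "podman";` explicitly. The default depends on your `system.stateVersion`, so spelling it out avoids surprises.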

At this point, we can go ahead and set up some containers! Let’s start with the GitLab container:

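```nix
# Open the firewall for our ports. Make sure to update this in the future as you use other ports!
networking.firewall.allowedUDPPorts = [
  80
  443
  22
];

networking.firewall.allowedTCPPorts = [
  80
  443
  22
];

# ...

virtualisation.oci-containers.containers.gitlab = {
  autoStart = true;
  image = "gitlab/gitlab-ce:latest";
  environment = {
    GITLAB_OMNIBUS_CONFIG = "external_url 'http://localhost/';";
  };
  volumes = [
    "gitlab-data:/var/opt/gitlab"
    "gitlab-config:/etc/gitlab"
    "gitlab-logs:/var/log/gitlab"
  ];
  ports = [
    "80:80"
    "443:443"
    # If the host already runs its own SSH server on port 22, this mapping will
    # conflict with it; map a different host port instead (e.g. "2222:22").
    "22:22"
  ];
};
```

Before you rebuild, let’s talk a bit about what is going on here. Anyone familiar with the compose syntax may quickly notice that this NixOS configuration is very similar to a compose file. In fact, I based it on the official GitLab Docker docs. `image` picks the GitLab Community Edition image. `GITLAB_OMNIBUS_CONFIG` passes settings into GitLab’s own configuration; here, that is the `external_url` GitLab uses to build its links. The `volumes` entries keep GitLab’s data, configuration, and logs in named volumes so they survive container restarts. Each `ports` entry maps a host port to a container port (`host:container`). Make sure you understand what is happening, as understanding this will be super useful going forward.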

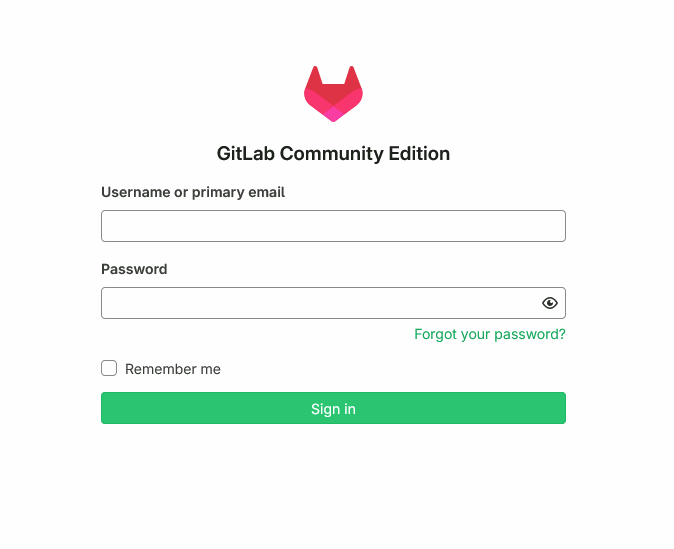

Now, if you rebuild your system (and give GitLab a few minutes to finish starting up the first time), you should be able to access your GitLab instance at localhost:80. This is amazing! From another device on your network, you should even be able to go to `http://YOUR_SERVER_IP:80` and see the same login page!

However, there are still a couple of issues with this solution:

- We are doing everything over HTTP and don’t have any way of securing our connection. Your browser may even have warned you that the page is insecure.
- Unless you have a static IP, your server’s IP address will likely change and become impossible to keep track of.

To resolve these issues, let’s discuss networking!

Networking

Networking is a huge part of any system. It powers the internet and, by extension, the world. In this section, we are going to set up a networking stack that lets us reach our GitLab instance. First, let’s make sure the basic networking is in place in our configuration.nix. We want to be able to reach this GitLab server at a known IP address; if that address changes, finding the server becomes a chore. Because of this, we need to give our server a static IP address. On top of this, I use a bridge for my network, as I also run a VM on this server that has its own IP address:

```nix
networking.useDHCP = false;

networking.bridges.br0 = {
  interfaces = [ "enp2s0" ]; # replace this with your network interface, which can be found with `ip link show` (NOT lo, the loopback interface)
};

networking.interfaces.br0.ipv4.addresses = [{
  address = "192.168.1.5"; # change this to your IP address; make sure it is one your router will not automatically assign
  prefixLength = 24;
}];
```

In the above snippet, we disable DHCP (automatic IP address assignment), attach our network interface to a bridge named br0, and give that bridge a static IP address. Remember this IP address, as it will be useful going forward. After running `sudo nixos-rebuild switch` once more, you should still be able to access the internet and go about your day without issue. If you cannot, make sure that your router is not assigning the same IP to a second device.
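One thing the snippet above does not set is where traffic should leave your network or which DNS servers to ask. DHCP normally hands out both, and with it disabled nothing fills them in. If you lose internet access after rebuilding, a missing default gateway is a likely culprit. The sketch below assumes your router sits at 192.168.1.1; run `ip route` before the change and look for the `default via` line to confirm. The resolvers are placeholders:

```nix
# Sketch: with networking.useDHCP = false, the gateway and DNS servers must be set by hand.
# Assumption: the router is 192.168.1.1; adjust to your network.
networking.defaultGateway = {
  address = "192.168.1.1";
  interface = "br0"; # route through the bridge that now holds the static IP
};

# Placeholder resolvers: the router itself, then a public resolver as a fallback.
networking.nameservers = [ "192.168.1.1" "1.1.1.1" ];
```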

Now that you have a static IP address, you should be able to access the GitLab instance at that address from any device on your network (provided your firewall allows it)!

HTTP vs HTTPS and Some Obstacles (overview of HTTPS/web security in relation to Git)

Now we get into the fun part. Let’s discuss a small issue that is easy to resolve, but may seem difficult at first. I highly recommend sticking with me through this section if you aren’t super familiar with networking or how the internet works in the modern day.

Generally, when you go to a webpage you type in the name and it just appears. On the backend, there’s a process that gets you there that goes something like this:

```text
Client -> Server:80 (server at port 80)
```

- You type in “example.com”.
- The computer finds the IP address by checking the DNS records for “example.com -> IP Address”.
- The computer then goes to that IP address and looks for the webpage on port 80.

This is a massive oversimplification, but is the basic idea behind HTTP. However, in recent years, the internet has moved to adopting HTTPS, which is a slightly different process that allows for encryption. In HTTPS, the process is as follows:

```text
Client -> Server:443 (and back again, with a handshake as described below)
```

- You type in “example.com”.
- The computer finds the IP address by checking the DNS records for “example.com -> IP Address”.
- The computer then connects to that IP address on port 443.
- The server presents its certificate as the first part of a handshake.
- The computer checks the certificate to make sure that it is valid (signed by a trusted authority, issued for “example.com”, not expired, etc.).
- The client and server finish the handshake, agreeing on keys for an encrypted connection between them (this is more complex, but we don’t need to go into that here).
- Only then does the computer request the webpage, over that encrypted connection.

Now let’s talk about what this means for us.

Certificates and You

Normally, HTTP is somewhat acceptable to use on a secure private network, but HTTPS is much more secure. With plain HTTP, anyone who can intercept your traffic can read it.

On top of this, without a certificate signed by a CA (certificate authority), it’s difficult to tell whether a website really is who it claims to be. DNS is often resolved by the router you are connected to. So someone could theoretically set up a network (e.g., McDonald’s Free Wifi) whose router answers “mybank.com” with their own malicious IP address, and any device that connects would be sent there instead.

This presents a massive problem for security, and not just for browsing the internet. Git relies on DNS too: whether you clone a repository over HTTP(S) or SSH, the server’s name is still resolved using DNS. This opens the door to a “man-in-the-middle” attack, where someone sets up their own server and pretends to be yours. If they pulled that off, they could trick you into cloning malware instead, and without some way to verify the server, your computer would accept it willingly.

This is why Git refuses to clone over HTTPS when it cannot verify the server’s certificate (SSH connections are protected differently, by checking the server’s host key). In our case, this means that we need a certificate our devices trust, ideally one signed by a trusted authority, for our GitLab instance. While we won’t get a certificate just yet, I want you to be broadly aware of how this process works.

Nginx

On top of all of this, we have another issue. When we want to host a second webpage (e.g., a blog, Pi-hole, Adguard Home, etc.), we will ideally still want to reach it on port 80. This should set off alarm bells in the networking side of your brain: GitLab is already using port 80, so we would have a conflict! To resolve this, we need what is called a “reverse proxy”. Nginx will fill that role for us, and luckily, NixOS makes it extremely easy to set up.

First, when we want to host a webpage it is common to assume that we are going to be using port 80. Due to this, many containers will specifically use port 80 to host their webpages internally. Then, users will map the port of the container to their host so that when someone goes to your IP address in their web browser, it will do the following:

```text
Client -> Server:80 -> Container:80
```

But what if we want to have multiple webpages running on the same server? With multiple containers, we can’t map them all to the host’s port 80, as they would conflict. This is where Nginx comes in. Nginx listens on the host’s ports instead of the containers. Whenever it receives a request, it looks at the hostname and decides which container to forward it to. The hostname is what the client entered in their browser (for example, “example.com”), which the browser sends along with the request in its Host header:

```text
Client -> Server:80 -> Nginx:80 -> Container1:80 if the hostname is "container1.example.com"
                                -> Container2:80 if the hostname is "container2.example.com"
```

This is great, as it allows us to run as many containers as we want, as long as they have separate hostnames. To set up the hostnames, we simply need to configure the DNS records and Nginx.

So, before we get too far ahead of ourselves, let’s go ahead and set up Nginx.

First, let’s go ahead and unmap our GitLab container ports so that it doesn’t conflict with Nginx:

```nix
virtualisation.oci-containers.containers.gitlab = {
  autoStart = true;
  image = "gitlab/gitlab-ce:latest";
  environment = {
    GITLAB_OMNIBUS_CONFIG = "external_url 'http://localhost/';";
  };
  volumes = [
    "gitlab-data:/var/opt/gitlab"
    "gitlab-config:/etc/gitlab"
    "gitlab-logs:/var/log/gitlab"
  ];
  # removed the ports here
};
```

Next, we can go ahead and add the Nginx configuration:

```nix
services.nginx = {
  enable = true;
  recommendedProxySettings = true;
  recommendedTlsSettings = true;
  virtualHosts."gitlab.mywebsite.com" = {
    locations."/" = {
      proxyPass = "http://10.88.0.19:80/"; # what should this be?
      proxyWebsockets = true;
    };
  };
};
```

But we’ve hit an issue! Nginx lets us send the traffic to our container, but what IP address should we send it to? To figure this out, go ahead and run the following command:

```bash
sudo podman inspect -f '{{range.NetworkSettings.Networks}}{{.IPAddress}}{{end}}' gitlab # "gitlab" is the name of the container!
```

This will show us the IP address of the container. In my case, this is 10.88.0.19.

If you have issues with this, run `sudo podman network list` to make sure that Podman’s network is visible and set up correctly (you should see `podman` in the list).

On top of this, we can even ping the container from the host (make sure this gets a response, using the IP address you got from the previous command):

```bash
ping 10.88.0.19
```

This works because the container is attached to a network that lives inside our machine. If we ran this command from another device on our network, it would not be able to reach the container.

One other quick thing to note is that the container is NOT guaranteed to get the same address every time it starts. To give it a static IP address, let’s modify our GitLab container configuration as follows. While we’re at it, we also point `external_url` at the hostname Nginx will serve it under:

```nix
virtualisation.oci-containers.containers.gitlab = {
  autoStart = true;
  image = "gitlab/gitlab-ce:latest";
  environment = {
    GITLAB_OMNIBUS_CONFIG = "external_url 'http://gitlab.mywebsite.com/';";
  };
  volumes = [
    "gitlab-data:/var/opt/gitlab"
    "gitlab-config:/etc/gitlab"
    "gitlab-logs:/var/log/gitlab"
  ];
  extraOptions = [
    "--net=podman"
    "--ip=10.88.0.19"
  ];
};
```

Now you should be able to rebuild your system and ping the container at the above IP address using this command:

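```bash
ping 10.88.0.19
```

If you are having issues with this, make sure that the IP address you’ve chosen is within the subnet of the `podman` network and isn’t already used by another container. The subnet is 10.88.0.0/16 by default, and `sudo podman network inspect podman` shows the actual range. I didn’t delve into this further, so if you have issues and find an answer, let me know and I’ll add the solution here.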

Great! Also, notice that we do not need to map the ports anymore, as we are using the IP address of the container for Nginx instead of the host’s ports mapped directly to the container.

At this point, you should be able to access your GitLab instance once again by using localhost:80 in your browser.
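That works because of how Nginx handles unrecognized hostnames. When a request’s hostname matches none of its virtual hosts (as with `localhost` or a bare IP address), Nginx hands it to a default server. GitLab’s is currently the only virtual host, so it gets those requests. Once more hosts are added, which one Nginx falls back to is no longer obvious. NixOS has a per-host `default` option to pin it. A sketch, assuming you want GitLab to stay the fallback:

```nix
# Sketch: add this line inside the existing
# services.nginx.virtualHosts."gitlab.mywebsite.com" = { ... }; block so that
# requests for names Nginx doesn't recognize (e.g. http://localhost or
# http://<server IP>) are still served by GitLab.
default = true;
```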

Cloning your First Repository? (or not!)

Now that we have everything set up and working, let’s clone our first repository! By default, GitLab requires an administrator to approve new accounts, since open sign-ups are a security risk. So go ahead and register your account, then log in as the root user. You can find the initial root password with the command below:

```bash
sudo podman exec -it gitlab grep 'Password:' /etc/gitlab/initial_root_password
```

Now you should be able to log in with the username root and the password you got from the previous command.

This password file is deleted automatically on the first container restart after 24 hours, so you should save the password somewhere safe.

Once you are logged in as root, you should be able to approve your new account from the Admin Area.

Now, let’s create and clone our first repository! Click the “New Project” button and fill out the form. Have it create a README.md file so that we have something to clone, then click “Code” > “Clone with HTTP” (or SSH, as I prefer).

Now go ahead and run your clone command and you might notice something odd.

It’s not cloning the repository! Instead, it’s complaining about a self-signed certificate and erroring out.

At this point, you could realistically disable Git’s certificate verification, but I wouldn’t recommend it due to the security risks. Instead, let’s fix our setup so that Nginx serves a certificate our devices can trust, whether self-signed or signed by a CA.

DNS and More!

Now we have a clear issue with our setup: we need Nginx to serve a certificate that our devices will accept. To do this, we need a few things.

First, we need to set up a DNS server to resolve our hostnames to IP addresses. Personally, I use Adguard Home for this, so I will showcase the setup for it; however, you should be able to do this with any number of tools. Some other options are:

- Pi-hole, which is extremely similar to Adguard Home in terms of setup.
- Dnsmasq, which Pi-hole uses under the hood.
- Unbound.

Some people even prefer to combine Unbound with Pi-hole, though I found that process overly complex for what I was originally going for. I also really liked the ad blocking that Adguard Home provides, not to mention its support for DNS over HTTPS, which improves our security that much more.
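If you’d rather not run another container, the Dnsmasq option can also be enabled natively on NixOS. The sketch below assumes the server’s static IP from earlier (192.168.1.5) and uses a public resolver as a placeholder upstream. It uses the `settings` attribute found in recent NixOS releases. Run it instead of, not alongside, Adguard Home, since both need port 53:

```nix
# Sketch: native dnsmasq as a small LAN DNS server (an alternative to Adguard Home).
services.dnsmasq = {
  enable = true;
  settings = {
    # Forward anything we don't answer ourselves to an upstream resolver (placeholder).
    server = [ "1.1.1.1" ];
    # Answer mywebsite.com and every subdomain of it with the server's static IP,
    # which is where Nginx listens.
    address = "/mywebsite.com/192.168.1.5";
    # Use only the upstream listed above instead of reading /etc/resolv.conf.
    no-resolv = true;
  };
};
```

Port 53 still needs to be open in the firewall (UDP and TCP) for other devices to use it.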

If you are up for using Adguard Home, you can go ahead and add it to your configuration as a container:

```nix
virtualisation.oci-containers.containers.adguardhome = {
  autoStart = true;
  image = "adguard/adguardhome:latest";
  volumes = [
    "adguardwork:/opt/adguardhome/work"
    "adguardconf:/opt/adguardhome/conf"
  ];
  environment = {
    TZ = "America/Chicago";
    # Note: FTLCONF_* variables (such as FTLCONF_webserver_api_password) are Pi-hole
    # settings and are ignored by Adguard Home. Its admin username and password are
    # chosen in the setup wizard on port 3000 instead, so no password lives in this file.
  };
  ports = [
    # DNS is served over both UDP and TCP; a bare "53:53" would only map TCP.
    "53:53/udp"
    "53:53/tcp"
    "3000:3000"
  ];
  extraOptions = [
    "--net=podman"
    "--ip=10.88.0.20"
  ];
};
```

Note that we need port 3000 at first for Adguard Home’s setup wizard, but we can remove that mapping after the setup is done and reach the dashboard on port 80 through Nginx instead. Port 53, on the other hand, is used for DNS itself, so we keep it mapped.

Now, let’s connect it to our Nginx setup so that we can reach its dashboard from our browser by name:

```nix
services.nginx = {
  enable = true;
  recommendedProxySettings = true;
  recommendedTlsSettings = true;
  virtualHosts."gitlab.mywebsite.com" = {
    locations."/" = {
      proxyPass = "http://10.88.0.19:80/"; # the GitLab container's static IP
      proxyWebsockets = true;
    };
  };
  virtualHosts."adguard.mywebsite.com" = {
    locations."/" = {
      proxyPass = "http://10.88.0.20:80/"; # the Adguard Home container's static IP and admin web port
      proxyWebsockets = true;
    };
  };
};
```

After rebuilding, go through the initial Adguard Home setup and choose port 80 for the admin web interface. You can reach the wizard in either of two ways:

- From the server itself, at `http://localhost:3000`.
- From another device, at `http://YOUR_SERVER_IP:3000`, if you temporarily open port 3000 in the firewall.

Afterwards, you can remove the "3000:3000" port mapping and use port 80 through Nginx.

At this point, you should be able to configure your DNS server to resolve both gitlab.mywebsite.com and adguard.mywebsite.com to your server’s static IP address. Adguard Home calls these DNS rewrites. Point them at the server rather than the containers, since Nginx on the server is what answers those requests and forwards them on. Change mywebsite to whatever domain you own or want to use.

To do this do the following:

Go to Filters > DNS rewrites > Add DNS rewrite and then add the following:

Domain: *.mywebsite.com (where this is your domain, or you could do *.something.lan if you don’t have a domain)

IP Address: Your Server IP Address (this is what you set the static IP address as earlier)

Next, point your network at this DNS server. Ideally, set your router’s DNS server to your server’s IP address. If your router doesn’t let you change its DNS server, configure your individual devices instead. Once that’s done, you should be able to go to gitlab.mywebsite.com (or whatever domain you chose) from other devices!

If you are having problems with this, make sure that your firewall is allowing DNS requests (port 53) through to your server!
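Here is a sketch of the firewall lists from earlier with DNS added. DNS mostly uses UDP but falls back to TCP for larger responses, so port 53 goes in both lists. These replace the earlier definitions rather than sitting next to them, because defining the same option twice in one file is an error:

```nix
networking.firewall.allowedTCPPorts = [
  80  # HTTP (Nginx)
  443 # HTTPS (Nginx)
  22  # SSH
  53  # DNS over TCP
];

networking.firewall.allowedUDPPorts = [
  80
  443
  22
  53  # DNS over UDP
];
```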

Now, we can finally go ahead and get HTTPS working to enable us to access our GitLab instance securely over our network.

Certificates Galore

Let’s talk briefly about what a certificate is, and how we can get one. First, if you do not want to use a CA (Certificate Authority) to sign your certificate (which requires you to own the domain you are using publicly, such as on Cloudflare) you can absolutely self-sign a certificate and set up your devices to trust it manually. If you prefer that, this is one guide that covers that process (though there are many more): https://tecadmin.net/step-by-step-guide-to-creating-self-signed-ssl-certificates/

Once you have a self-signed certificate, you can skip the rest of this section down to where we set up Nginx to use your certificate.

On the other hand, if you are like me and want to use a CA to sign your certificate (to prevent extra configuration on other devices), keep reading!

Certificate Authorities

Broadly speaking, before a certificate authority signs a certificate, it needs proof that you control the domain (for example, mywebsite.com). To get that proof, it uses something called challenges. The easiest and most common challenge is HTTP-01. For this one, all you need to do is place a token at a specific, publicly reachable path on your website (under `/.well-known/acme-challenge/`), which the CA then fetches over port 80. This is super easy to automate, and is therefore a great method if you are hosting an internet-facing application.

The problem with this, however, is that it requires you to have a publicly accessible website. For this to work, you need to be able to do a port forward with your router, meaning that your router would expose a port on your server to the internet. Since the goal of this was to have GitLab entirely local, and port forwarding inherently introduces some risk (though it can certainly be done safely), I decided to not use this challenge.

While you can look through the rest of the challenges, there is one more that is perfect for our use case: DNS-01. For this challenge, you add a TXT record named `_acme-challenge.mywebsite.com` containing a given token to your domain’s public DNS. This replaces putting the token on your website, which is what the HTTP challenge does. As a bonus, DNS-01 is the only challenge Let’s Encrypt accepts for wildcard certificates.

So how do we get the token? What do we need to do?

Generally, people will use an ACME client to do this. In our case, I highly recommend using acme.sh. This is a fairly lightweight shell script that will automatically handle adding the DNS record, asking Let’s Encrypt to check it, and then creating the certificate file. It even lets you automate this process easily!
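acme.sh is what I used, and it is what the rest of this section walks through. If you’d rather keep everything in configuration.nix, though, NixOS ships a built-in ACME module, which drives the lego client. It can run the same DNS-01 challenge against Cloudflare and renew the certificate automatically.

The sketch below makes two assumptions. First, your Cloudflare API token sits in a root-only file at the hypothetical path `/var/lib/secrets/cloudflare.env`. Second, the variable name follows lego’s Cloudflare provider docs, which you should double-check:

```nix
# Sketch: NixOS-native alternative to acme.sh (runs lego under the hood).
# Assumption: /var/lib/secrets/cloudflare.env (hypothetical path, readable only
# by root) contains a line like:
#   CLOUDFLARE_DNS_API_TOKEN=your-token-here
security.acme = {
  acceptTerms = true;
  defaults.email = "you@example.com"; # placeholder contact address for Let's Encrypt
  certs."mywebsite.com" = {
    domain = "mywebsite.com";
    extraDomainNames = [ "*.mywebsite.com" ];
    dnsProvider = "cloudflare";
    environmentFile = "/var/lib/secrets/cloudflare.env";
    group = "nginx"; # let Nginx read the issued key
  };
};
```

With this in place, each Nginx virtual host can use `useACMEHost = "mywebsite.com";` (alongside `forceSSL = true;`) in place of the `sslCertificate` and `sslCertificateKey` paths. There is then no manual copying or renewing.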

To install acme.sh on NixOS, all you need to do is add the following package to your configuration:

```nix
environment.systemPackages = [
  # ... rest of packages
  pkgs.acme-sh
];
```

After rebuilding, you should be able to run `acme.sh --help` without any errors.

Now, the steps change slightly depending on what service you own your domain with. Personally, I will be using Cloudflare, but you should be able to find your own provider here: https://github.com/acmesh-official/acme.sh/wiki/dnsapi

In my case, I needed to create an API token for my domain on Cloudflare (to let acme.sh automatically configure the DNS record on my behalf using the Cloudflare API).

Next, I set the following environment variables:

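```bash
# With a scoped Cloudflare API token (see acme.sh's Cloudflare dnsapi docs):
export CF_Token="my-api-token"
export CF_Account_ID="my-account-id"

# ...or, if you use your account's Global API Key instead of a token:
# export CF_Email="you@example.com"
# export CF_Key="my-global-api-key"
```

Now, personally, I like to use Let’s Encrypt for this process (acme.sh defaults to ZeroSSL), so I set it as the default CA:

```bash
acme.sh --set-default-ca --server letsencrypt
```

Finally, I can run the following command to let acme.sh do the heavy lifting of adding the token, having it checked, and creating the certificate files:

```bash
acme.sh --issue -d mywebsite.com -d '*.mywebsite.com' --dns dns_cf --force
```

This creates a wildcard certificate, meaning it is valid for mywebsite.com itself and any direct subdomain of it (such as gitlab.mywebsite.com). This is fine for testing, but unnecessarily permissive. For the utmost safety, narrow the scope of this certificate to only the subdomains you want to use (for example, `-d gitlab.mywebsite.com -d adguard.mywebsite.com` instead of the wildcard).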

Now, you should see acme.sh create the certificate files and let you know where they are.

Now that we have our certificate, all we need to do is set up Nginx to use our new certificate, so make a note of where all the files are.

Configuring Nginx for HTTPS

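Luckily for us, NixOS + Nginx makes configuring HTTPS a breeze. First, let’s copy the certificate and key files out of your home directory into a directory that Nginx can access. In my case, I did the following:

```bash
sudo mkdir -p /etc/nginx/ssl
sudo cp ~/.acme.sh/mywebsite.com_ecc/fullchain.cer /etc/nginx/ssl/fullchain.cer # this is the certificate; your path is likely different
sudo cp ~/.acme.sh/mywebsite.com_ecc/mywebsite.com.key /etc/nginx/ssl/mywebsite.com.key # this is the key; your path is likely different
# Nginx on NixOS runs as the nginx user, so make the key readable by that group only
sudo chown root:nginx /etc/nginx/ssl/mywebsite.com.key
sudo chmod 640 /etc/nginx/ssl/mywebsite.com.key
```

Keep in mind that Let’s Encrypt certificates expire after 90 days, and these copies won’t update on their own. acme.sh can renew the certificate (`--renew`). Its `--install-cert` command (with `--key-file`, `--fullchain-file`, and `--reloadcmd`) can copy the renewed files into place and reload Nginx for you.

All we need to do now is add the following to our configuration: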

```nix
services.nginx = {
  enable = true;
  recommendedProxySettings = true;
  recommendedTlsSettings = true;
  virtualHosts."gitlab.mywebsite.com" = {
    # these three enable HTTPS
    forceSSL = true;
    sslCertificate = "/etc/nginx/ssl/fullchain.cer";
    sslCertificateKey = "/etc/nginx/ssl/mywebsite.com.key";
    locations."/" = {
      proxyPass = "http://10.88.0.19:80/";
      proxyWebsockets = true;
    };
  };
  virtualHosts."adguard.mywebsite.com" = {
    # these three enable HTTPS
    forceSSL = true;
    sslCertificate = "/etc/nginx/ssl/fullchain.cer";
    sslCertificateKey = "/etc/nginx/ssl/mywebsite.com.key";
    locations."/" = {
      proxyPass = "http://10.88.0.20:80/";
      proxyWebsockets = true;
    };
  };
};
```

Now you should be done! After rebuilding, you can access your GitLab instance securely from any device on your network that uses your DNS server. Following the previous steps to clone your repo should now work!
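Two GitLab-side tweaks are worth considering now that Nginx terminates TLS:

- **Clone URLs.** GitLab builds its clone URLs from `external_url`, so pointing it at `https://` makes the clone button show the right address. Because Nginx on the host already handles TLS, GitLab’s bundled web server should keep listening on plain HTTP port 80. GitLab’s docs on SSL termination at a reverse proxy describe the `nginx['listen_port']` and `nginx['listen_https']` settings for this.
- **Large pushes.** Nginx rejects request bodies above a size limit, which can break large pushes over HTTPS.

A sketch, assuming the container and virtual host from above; double-check the GitLab settings against the docs for your GitLab version:

```nix
# 1) Inside virtualisation.oci-containers.containers.gitlab, replace `environment` with:
environment = {
  # Assumption: TLS ends at the host's Nginx, so GitLab's bundled web server keeps
  # serving plain HTTP on port 80 and skips its own Let's Encrypt integration.
  GITLAB_OMNIBUS_CONFIG = "external_url 'https://gitlab.mywebsite.com'; nginx['listen_port'] = 80; nginx['listen_https'] = false; letsencrypt['enable'] = false;";
};

# 2) Inside services.nginx.virtualHosts."gitlab.mywebsite.com", extend locations."/":
locations."/" = {
  proxyPass = "http://10.88.0.19:80/";
  proxyWebsockets = true;
  # 0 disables Nginx's request body size check for this location, so large
  # git pushes over HTTPS aren't rejected with "413 Request Entity Too Large".
  extraConfig = "client_max_body_size 0;";
};
```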

Conclusion

Whew. That was a lot of learning condensed into a short blog post. I don’t know about you, but I struggled through this process my first time. Honestly, this was one of my favorite projects that I’ve done in a very long time. Not only did it teach me a ton about Linux (and many of the services that we use on a daily basis and take for granted), but it also greatly boosted my understanding of the cloud as a whole. On top of that, getting the privacy and security of self-hosting for relatively cheap is a great bonus!

I hope you enjoyed this article and learned something new. Let me know if you have any questions or comments below! If you are having issues, I can almost guarantee that someone else will have the same questions, and passing the solution along via the comments is a great way to save everyone the headache you may have just had.

Also, let me know if there are any other things you’d like me to cover in a future article, and I’d be happy to look into them!

Happy Coding!

-Nicholas